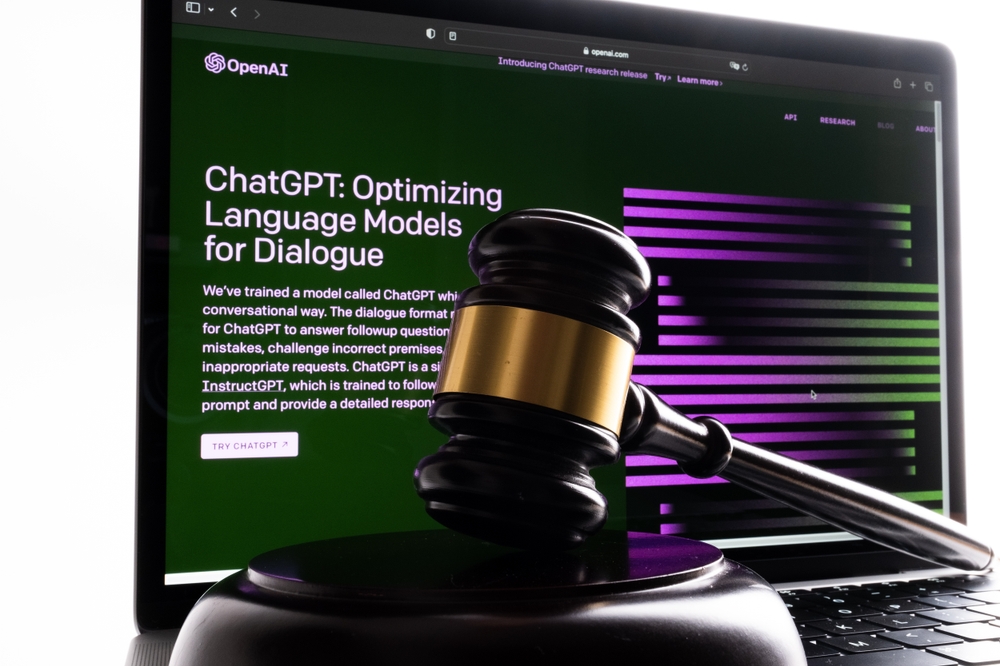

AI chatbots have long been accused of providing false information, and now the companies that develop them are facing possible legal liability.

The first such lawsuit against OpenAI was filed by Mark Walters, a radio host from Georgia. He claims that ChatGPT gave journalist Fred Riehl false information about him, accusing Walters of fraud and embezzlement of funds from a non-profit organization. The lawsuit was filed June 5 in the Superior Court of Gwinnett County, Georgia, and seeks damages in an amount that has not yet been determined.

This is the first time that the developer of an artificial intelligence system has been sued for defamation over the system's output. Chatbots are often accused of hallucinating and of being unable to distinguish truth from fiction, but in most cases users are merely misled or have their time wasted. Sometimes, however, such mistakes can cause real harm.

Examples include the professor who threatened to flunk his class after ChatGPT incorrectly claimed that his students had used AI to write their essays, and the lawyer who asked the chatbot to find supporting material for a court case, which it simply fabricated. There is also a known case of a Ukrainian TV channel that published a fake biography based on information supplied by ChatGPT.

OpenAI does point out that ChatGPT can sometimes provide incorrect information, yet the company also presents it as a source of reliable data and a way to learn new things. OpenAI CEO Sam Altman has said that he prefers to get new information from ChatGPT rather than from books.

It is uncertain whether such companies can be held liable, as no legal instruments have yet been developed for this type of technology. In the U.S., Section 230 of the Communications Decency Act generally protects Internet companies from legal liability for information that third parties post on their platforms. However, it is not clear whether these protections apply to artificial intelligence systems that generate information on their own rather than simply pointing to data sources.

Walters' court case may help clarify this issue. When ChatGPT was asked to summarize a court case from a PDF file, it produced a convincing summary containing false accusations. Notably, ChatGPT cannot access external data such as PDFs without additional plugins. Output that conceals this limitation can be a problem, although when journalists from The Verge tested the system, it clearly stated that it could not access such files.

For now, however, it remains unclear how AI companies can be held accountable, since the legal framework for such cases has not yet taken shape, notes NIX Solutions. The resolution of this case could therefore have significant implications for future liability in the field of artificial intelligence.