Developers at Meta have built an algorithm that creates a high-quality animated 3D head model using the front camera of a smartphone. The paper will be presented at the SIGGRAPH 2022 conference.

Many large IT companies and research labs are developing technologies for creating realistic animated 3D avatars. For example, back in 2019 Meta itself showed a spherical rig with more than a hundred cameras and light sources, which captures a person seated at its center in high resolution and reconstructs their 3D model. The company is developing such technologies largely with virtual reality in mind, says N+1. In particular, in April Meta demonstrated a new algorithm running on a prototype VR headset with five cameras aimed at different parts of the face. This allowed the algorithm to recreate a realistic face model in real time, which can then be shown to other users.

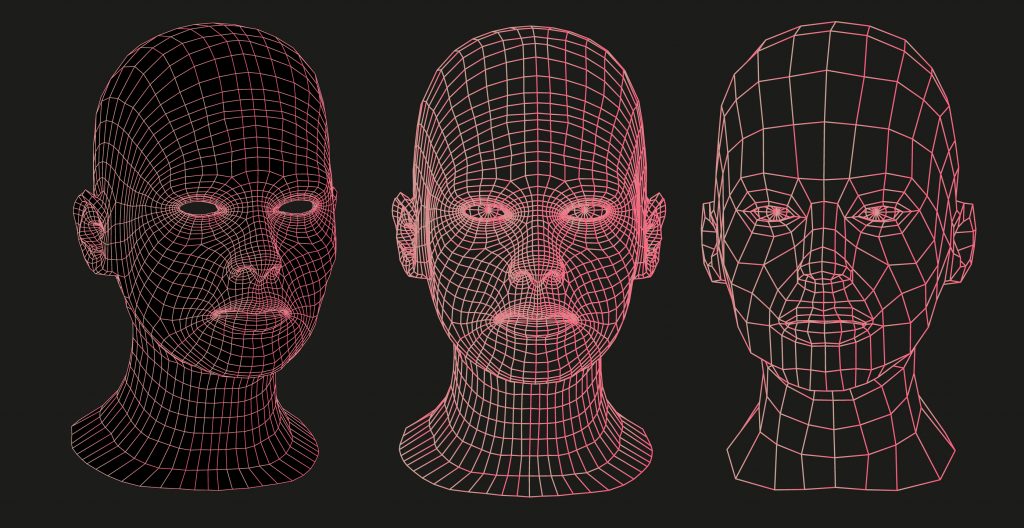

In the new work, engineers from Meta Reality Labs, led by Jason Saragih, used a large camera rig to build an algorithm that, once trained, can create a realistic head model using an ordinary smartphone. The algorithm consists of two main parts: a hypernetwork that learns a universal model of the head and facial expressions, and a personalized network that is derived from the universal one and learns a model of a specific person.

To train the universal network, the engineers used a spherical rig with 40 color cameras and 50 monochrome cameras, mounted within a radius of 1.2 meters of the center of the sphere, where a person sits during capture. The cameras recorded at a resolution of 4096 by 2668 pixels. In addition to the cameras, the rig contains 350 light sources that illuminate the entire head uniformly. During capture, each person had to perform 65 facial movements, look in 25 different directions, read 50 phonetically balanced sentences, and freely move their head or parts of their face.
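To give a sense of scale, the capture figures quoted above can be combined with some simple arithmetic. This is only an illustrative sketch: it assumes all cameras record at the stated resolution and fire at the same instant, which the article does not spell out, and the function name `rig_summary` is made up for this example.

```python
# Figures quoted in the article.
COLOR_CAMERAS = 40
MONO_CAMERAS = 50
WIDTH, HEIGHT = 4096, 2668


def rig_summary():
    """Return (camera count, pixels per frame, pixels per simultaneous capture).

    Assumption: every camera shoots at WIDTH x HEIGHT and all cameras
    are triggered together.
    """
    cameras = COLOR_CAMERAS + MONO_CAMERAS
    pixels_per_frame = WIDTH * HEIGHT
    pixels_per_capture = cameras * pixels_per_frame
    return cameras, pixels_per_frame, pixels_per_capture


cameras, pixels_per_frame, pixels_per_capture = rig_summary()
print(f"{cameras} cameras, {pixels_per_frame / 1e6:.1f} MP per frame, "
      f"{pixels_per_capture / 1e9:.2f} gigapixels per simultaneous capture")
```

Under these assumptions, each frame holds roughly 10.9 megapixels, and a single synchronized capture across all 90 cameras comes to a little under one gigapixel.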

A total of 255 people of different genders, races, and ages took part in creating the dataset. The capture sessions produced 3.1 million frames, which were then used for training.

Once the universal model has been built with the powerful rig, a personalized model can be created using a conventional camera. From the camera frames, the algorithm marks key points on the face, then builds a 3D model of the head and a texture, which is applied to the 3D model to produce a relatively realistic avatar. The next step is to create the final high-resolution personalized avatar from the universal network and this textured model. So that the avatar also reproduces the person's facial expressions well, the person is asked to move parts of their face in front of the camera.

To create personalized models, the developers used an iPhone 12, whose front camera provides a depth channel in addition to RGB. Despite the high quality of the results, the authors note that the algorithm still has drawbacks. In particular, after capture a personalized model requires several hours of training, and the algorithm does not cope well with glasses and unusual hairstyles.
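As background on why a depth channel is useful for 3D reconstruction, here is a minimal sketch of how a depth map can be turned into 3D points with a standard pinhole camera model. It is a generic textbook technique and is not claimed to be the step the authors use. The function name `backproject` and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values chosen for illustration.

```python
def backproject(depth, fx, fy, cx, cy):
    """Convert a depth map into camera-space 3D points (pinhole model).

    depth  : list of rows, each a list of depth values in meters
    fx, fy : focal lengths in pixels (hypothetical values here)
    cx, cy : principal point in pixels (hypothetical values here)
    Pixels with non-positive depth are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth measurement at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth map with one missing pixel, purely for illustration.
depth = [
    [0.5, 0.5],
    [0.0, 0.4],
]
points = backproject(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(len(points), "points recovered")
```

A real pipeline would use the calibrated intrinsics reported by the device rather than the toy values above.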

Realistic head and face models can be used not only in virtual reality but also for video calls, notes NIX Solutions. For example, developers from NVIDIA have proposed replacing video transmission with a head model that is transmitted in compressed form, and engineers from Google have built a video communication system with a three-dimensional display that does not require 3D glasses.