Low Accuracy Leads to Suspension

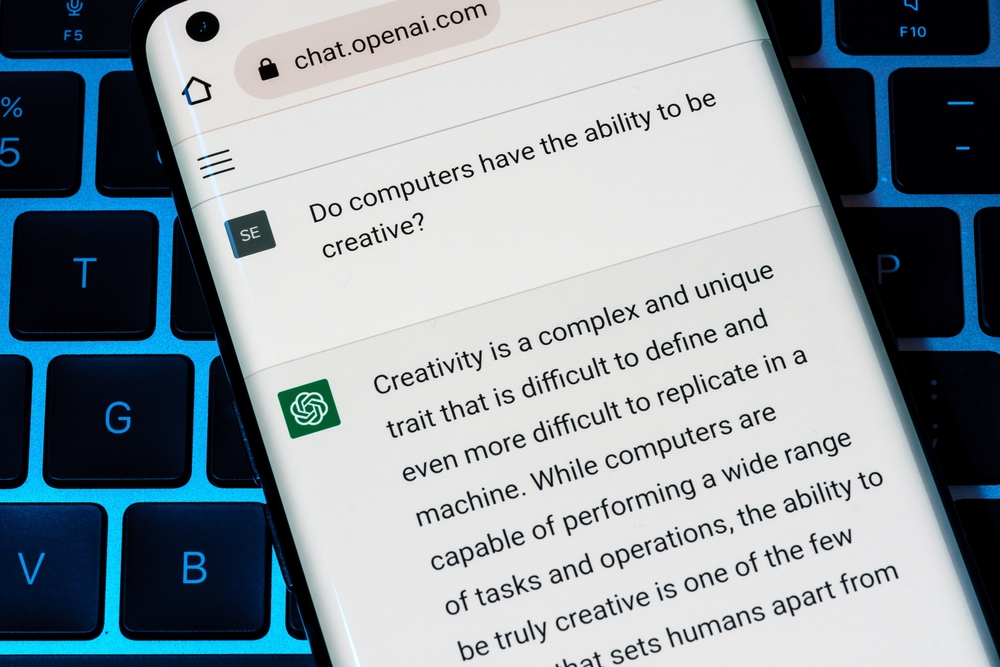

OpenAI has quietly suspended a tool designed to distinguish AI-generated text from human writing. The company acknowledged that the reason was the tool’s “low accuracy.”

Commitment to User Feedback and Improved Methods

OpenAI said it will take user feedback into account and is now researching more effective methods for identifying AI-generated text. The company has also “committed” to developing a similar tool to identify AI-generated audio and visual content. The text analysis platform was released in January 2023, when OpenAI emphasized the importance of creating systems that can detect false statements made by AI.

Research on AI-driven Disinformation Campaigns

At the same time, the company, together with researchers from Stanford and Georgetown universities, published a paper analyzing the risk of entire disinformation campaigns carried out with the help of AI. The paper says that language models have taken a big step forward and that the text they produce is difficult to distinguish from human writing. AI can generate persuasive and misleading text on a massive scale, turning it into a weapon in the hands of attackers. Such “attackers” could range from careless students to fringe political groups. The authors conclude that, given how widely available AI technologies have become, it is now almost impossible to prevent such incidents.

Challenges of Detecting Dangerous AI Models

One way to deal with potentially dangerous AI models could be specialized tools for detecting material created by generative neural networks. OpenAI’s implementation, however, offered limited capabilities and low accuracy: users had to manually enter at least a thousand characters of text, which the tool then assessed as written by a person or by AI. It correctly labeled only 26% of AI-written samples as “probably written by AI” and assigned the same label to human-written text in 9% of cases, notes NIX Solutions. The company did not recommend using the system as a “primary decision-making tool” but still made it publicly available. The platform was disabled on July 20, and no release date for an improved version has been announced.
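
To put the 26% and 9% figures in perspective, the rough sketch below estimates how often a “probably written by AI” label would be correct. It treats 26% as the share of AI-written text that gets flagged and 9% as the share of human-written text that gets flagged, and applies Bayes’ rule. The `ai_share` values (the fraction of submitted texts that are really AI-written) are hypothetical and do not come from OpenAI or from this article.

```python
def precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Fraction of flagged texts that are actually AI-written (Bayes' rule)."""
    flagged_ai = tpr * ai_share             # AI-written texts that get flagged
    flagged_human = fpr * (1.0 - ai_share)  # human-written texts that get flagged
    return flagged_ai / (flagged_ai + flagged_human)


TPR = 0.26  # reported: AI-written text labeled "probably written by AI"
FPR = 0.09  # reported: human-written text given the same label

# Hypothetical mixes of AI-written text among all submissions (assumed values).
for ai_share in (0.1, 0.5):
    print(f"AI share {ai_share:.0%}: precision {precision(TPR, FPR, ai_share):.1%}")
# AI share 10%: precision 24.3%
# AI share 50%: precision 74.3%
```

Under these assumptions, if only one in ten submitted texts were AI-written, roughly three out of four flagged texts would in fact be human-written, which is consistent with the advice not to rely on the tool as a “primary decision-making tool.”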